Compare commits

39 Commits

| SHA1 |
|---|
| `230c8821ec` |
| `ecef3d9538` |
| `3ecfe4d322` |
| `6a3f65db7f` |
| `ed490669b1` |
| `187b38ee22` |
| `261fe27b2e` |
| `a29f175cba` |
| `f26dacaf22` |
| `b4d81a9661` |
| `81c59f02d8` |
| `8101646ba5` |
| `93b7220d5f` |
| `18d3236586` |
| `bc16ae1794` |
| `d019942278` |
| `8c5551f93b` |
| `3b15feaf7d` |
| `326ad2c80d` |
| `b4b9f5c1be` |
| `ac5ad9d173` |
| `7dcd462883` |
| `5655661778` |
| `fac7b8551a` |
| `59fed008e0` |
| `286e8f8590` |
| `4daf261d95` |
| `b94f494b24` |
| `4c72746286` |
| `ae2c4b1ea9` |
| `abd49bca8e` |
| `5820a17659` |
| `a6a8cca427` |
| `faf478be99` |
| `f7c2905aa4` |
| `e283c6eba1` |
| `39d3459555` |
| `f0ceebf55d` |
| `c6f11e63e4` |

`.gitattributes` · vendored · Normal file · 1 line changed

```diff
@@ -0,0 +1 @@
+* text=lf
```

BIN

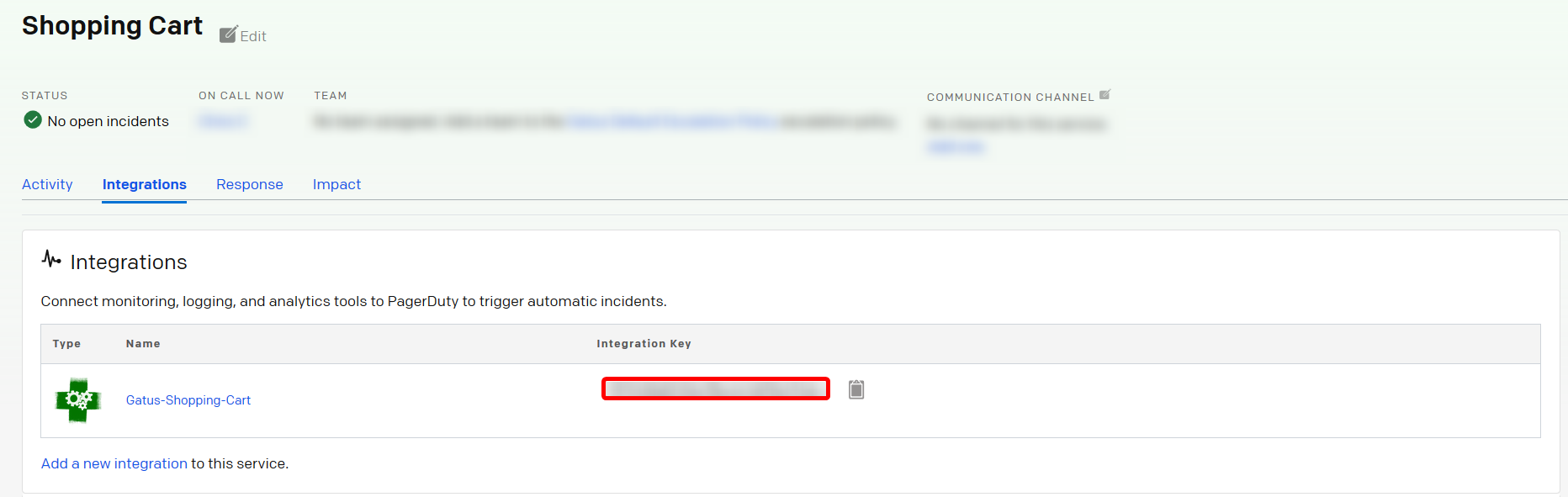

.github/assets/pagerduty-integration-key.png

vendored

Normal file

BIN

.github/assets/pagerduty-integration-key.png

vendored

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 86 KiB |

`.github/workflows/build.yml` · vendored · 6 lines changed

```diff
@@ -14,13 +14,13 @@ jobs:
     runs-on: ubuntu-latest
     timeout-minutes: 5
     steps:
-      - name: Set up Go 1.14
+      - name: Set up Go 1.15
         uses: actions/setup-go@v1
         with:
-          go-version: 1.14
+          go-version: 1.15
         id: go
       - name: Check out code into the Go module directory
-        uses: actions/checkout@v1
+        uses: actions/checkout@v2
       - name: Build binary to make sure it works
         run: go build -mod vendor
       - name: Test
```

`README.md` · 218 lines changed

```diff
@@ -1,13 +1,13 @@
 
 
 
-[](https://goreportcard.com/report/github.com/TwinProduction/gatus)
+[](https://goreportcard.com/report/github.com/TwinProduction/gatus)
 [](https://cloud.docker.com/repository/docker/twinproduction/gatus)
 
 A service health dashboard in Go that is meant to be used as a docker
 image with a custom configuration file.
 
-I personally deploy it in my Kubernetes cluster and have it monitor the status of my
+I personally deploy it in my Kubernetes cluster and let it monitor the status of my
 core applications: https://status.twinnation.org/
 
 
```

```diff
@@ -17,6 +17,8 @@ core applications: https://status.twinnation.org/
 - [Usage](#usage)
 - [Configuration](#configuration)
 - [Conditions](#conditions)
+- [Placeholders](#placeholders)
+- [Functions](#functions)
 - [Alerting](#alerting)
 - [Configuring Slack alerts](#configuring-slack-alerts)
 - [Configuring PagerDuty alerts](#configuring-pagerduty-alerts)
```

```diff
@@ -27,6 +29,9 @@ core applications: https://status.twinnation.org/
 - [Using in Production](#using-in-production)
 - [FAQ](#faq)
 - [Sending a GraphQL request](#sending-a-graphql-request)
+- [Recommended interval](#recommended-interval)
+- [Default timeouts](#default-timeouts)
+- [Monitoring a TCP service](#monitoring-a-tcp-service)
 
 
 ## Features
```

```diff
@@ -35,7 +40,7 @@ The main features of Gatus are:
 - **Highly flexible health check conditions**: While checking the response status may be enough for some use cases, Gatus goes much further and allows you to add conditions on the response time, the response body and even the IP address.
 - **Ability to use Gatus for user acceptance tests**: Thanks to the point above, you can leverage this application to create automated user acceptance tests.
 - **Very easy to configure**: Not only is the configuration designed to be as readable as possible, it's also extremely easy to add a new service or a new endpoint to monitor.
-- **Alerting**: While having a pretty visual dashboard is useful to keep track of the state of your application(s), you probably don't want to stare at it all day. Thus, notifications via Slack are supported out of the box with the ability to configure a custom alerting provider for any needs you might have, whether it be a different provider like PagerDuty or a custom application that manages automated rollbacks.
+- **Alerting**: While having a pretty visual dashboard is useful to keep track of the state of your application(s), you probably don't want to stare at it all day. Thus, notifications via Slack, PagerDuty and Twilio are supported out of the box with the ability to configure a custom alerting provider for any needs you might have, whether it be a different provider or a custom application that manages automated rollbacks.
 - **Metrics**
 - **Low resource consumption**: As with most Go applications, the resource footprint that this application requires is negligibly small.
 
```

````diff
@@ -74,69 +79,99 @@ Note that you can also add environment variables in the configuration file (i.e.
 
 ### Configuration
 
-| Parameter | Description | Default |
-| --------------------------------------------- | -------------------------------------------------------------------------- | -------------- |
-| `debug` | Whether to enable debug logs | `false` |
-| `metrics` | Whether to expose metrics at /metrics | `false` |
-| `services` | List of services to monitor | Required `[]` |
-| `services[].name` | Name of the service. Can be anything. | Required `""` |
-| `services[].url` | URL to send the request to | Required `""` |
-| `services[].conditions` | Conditions used to determine the health of the service | `[]` |
-| `services[].interval` | Duration to wait between every status check | `60s` |
-| `services[].method` | Request method | `GET` |
-| `services[].graphql` | Whether to wrap the body in a query param (`{"query":"$body"}`) | `false` |
-| `services[].body` | Request body | `""` |
-| `services[].headers` | Request headers | `{}` |
-| `services[].alerts[].type` | Type of alert. Valid types: `slack`, `twilio`, `custom` | Required `""` |
-| `services[].alerts[].enabled` | Whether to enable the alert | `false` |
-| `services[].alerts[].threshold` | Number of failures in a row needed before triggering the alert | `3` |
-| `services[].alerts[].description` | Description of the alert. Will be included in the alert sent | `""` |
-| `services[].alerts[].send-on-resolved` | Whether to send a notification once a triggered alert subsides | `false` |
-| `services[].alerts[].success-before-resolved` | Number of successes in a row needed before sending a resolved notification | `2` |
-| `alerting` | Configuration for alerting | `{}` |
-| `alerting.slack` | Webhook to use for alerts of type `slack` | `""` |
-| `alerting.twilio` | Settings for alerts of type `twilio` | `""` |
-| `alerting.twilio.sid` | Twilio account SID | Required `""` |
-| `alerting.twilio.token` | Twilio auth token | Required `""` |
-| `alerting.twilio.from` | Number to send Twilio alerts from | Required `""` |
-| `alerting.twilio.to` | Number to send twilio alerts to | Required `""` |
-| `alerting.custom` | Configuration for custom actions on failure or alerts | `""` |
-| `alerting.custom.url` | Custom alerting request url | `""` |
-| `alerting.custom.body` | Custom alerting request body. | `""` |
-| `alerting.custom.headers` | Custom alerting request headers | `{}` |
+| Parameter | Description | Default |
+|:---------------------------------------- |:----------------------------------------------------------------------------- |:-------------- |
+| `debug` | Whether to enable debug logs | `false` |
+| `metrics` | Whether to expose metrics at /metrics | `false` |
+| `services` | List of services to monitor | Required `[]` |
+| `services[].name` | Name of the service. Can be anything. | Required `""` |
+| `services[].url` | URL to send the request to | Required `""` |
+| `services[].conditions` | Conditions used to determine the health of the service | `[]` |
+| `services[].insecure` | Whether to skip verifying the server's certificate chain and host name | `false` |
+| `services[].interval` | Duration to wait between every status check | `60s` |
+| `services[].method` | Request method | `GET` |
+| `services[].graphql` | Whether to wrap the body in a query param (`{"query":"$body"}`) | `false` |
+| `services[].body` | Request body | `""` |
+| `services[].headers` | Request headers | `{}` |
+| `services[].alerts[].type` | Type of alert. Valid types: `slack`, `pagerduty`, `twilio`, `custom` | Required `""` |
+| `services[].alerts[].enabled` | Whether to enable the alert | `false` |
+| `services[].alerts[].failure-threshold` | Number of failures in a row needed before triggering the alert | `3` |
+| `services[].alerts[].success-threshold` | Number of successes in a row before an ongoing incident is marked as resolved | `2` |
+| `services[].alerts[].send-on-resolved` | Whether to send a notification once a triggered alert is marked as resolved | `false` |
+| `services[].alerts[].description` | Description of the alert. Will be included in the alert sent | `""` |
+| `alerting` | Configuration for alerting | `{}` |
+| `alerting.slack` | Configuration for alerts of type `slack` | `""` |
+| `alerting.slack.webhook-url` | Slack Webhook URL | Required `""` |
+| `alerting.pagerduty` | Configuration for alerts of type `pagerduty` | `""` |
+| `alerting.pagerduty.integration-key` | PagerDuty Events API v2 integration key. | Required `""` |
+| `alerting.twilio` | Settings for alerts of type `twilio` | `""` |
+| `alerting.twilio.sid` | Twilio account SID | Required `""` |
+| `alerting.twilio.token` | Twilio auth token | Required `""` |
+| `alerting.twilio.from` | Number to send Twilio alerts from | Required `""` |
+| `alerting.twilio.to` | Number to send Twilio alerts to | Required `""` |
+| `alerting.custom` | Configuration for custom actions on failure or alerts | `""` |
+| `alerting.custom.url` | Custom alerting request URL | Required `""` |
+| `alerting.custom.body` | Custom alerting request body. | `""` |
+| `alerting.custom.headers` | Custom alerting request headers | `{}` |
 
 
 ### Conditions
 
 Here are some examples of conditions you can use:
 
-| Condition | Description | Passing values | Failing values |
-| -----------------------------| ------------------------------------------------------- | ------------------------ | -------------- |
-| `[STATUS] == 200` | Status must be equal to 200 | 200 | 201, 404, ... |
-| `[STATUS] < 300` | Status must lower than 300 | 200, 201, 299 | 301, 302, ... |
-| `[STATUS] <= 299` | Status must be less than or equal to 299 | 200, 201, 299 | 301, 302, ... |
-| `[STATUS] > 400` | Status must be greater than 400 | 401, 402, 403, 404 | 400, 200, ... |
-| `[RESPONSE_TIME] < 500` | Response time must be below 500ms | 100ms, 200ms, 300ms | 500ms, 501ms |
-| `[BODY] == 1` | The body must be equal to 1 | 1 | Anything else |
-| `[BODY].data.id == 1` | The jsonpath `$.data.id` is equal to 1 | `{"data":{"id":1}}` | |
-| `[BODY].data[0].id == 1` | The jsonpath `$.data[0].id` is equal to 1 | `{"data":[{"id":1}]}` | |
-| `len([BODY].data) > 0` | Array at jsonpath `$.data` has less than 5 elements | `{"data":[{"id":1}]}` | |
-| `len([BODY].name) == 8` | String at jsonpath `$.name` has a length of 8 | `{"name":"john.doe"}` | `{"name":"bob"}` |
+| Condition | Description | Passing values | Failing values |
+|:-----------------------------|:------------------------------------------------------- |:-------------------------- | -------------- |
+| `[STATUS] == 200` | Status must be equal to 200 | 200 | 201, 404, ... |
+| `[STATUS] < 300` | Status must be lower than 300 | 200, 201, 299 | 301, 302, ... |
+| `[STATUS] <= 299` | Status must be less than or equal to 299 | 200, 201, 299 | 301, 302, ... |
+| `[STATUS] > 400` | Status must be greater than 400 | 401, 402, 403, 404 | 400, 200, ... |
+| `[CONNECTED] == true` | Connection to host must have been successful | true | false |
+| `[RESPONSE_TIME] < 500` | Response time must be below 500ms | 100ms, 200ms, 300ms | 500ms, 501ms |
+| `[IP] == 127.0.0.1` | Target IP must be 127.0.0.1 | 127.0.0.1 | 0.0.0.0 |
+| `[BODY] == 1` | The body must be equal to 1 | 1 | `{}`, `2`, ... |
+| `[BODY].user.name == john` | JSONPath value of `$.user.name` is equal to `john` | `{"user":{"name":"john"}}` | |
+| `[BODY].data[0].id == 1` | JSONPath value of `$.data[0].id` is equal to 1 | `{"data":[{"id":1}]}` | |
+| `[BODY].age == [BODY].id` | JSONPath value of `$.age` is equal to JSONPath value of `$.id` | `{"age":1,"id":1}` | |
+| `len([BODY].data) < 5` | Array at JSONPath `$.data` has fewer than 5 elements | `{"data":[{"id":1}]}` | |
+| `len([BODY].name) == 8` | String at JSONPath `$.name` has a length of 8 | `{"name":"john.doe"}` | `{"name":"bob"}` |
+| `[BODY].name == pat(john*)` | String at JSONPath `$.name` matches pattern `john*` | `{"name":"john.doe"}` | `{"name":"bob"}` |
 
 
+#### Placeholders
+
+| Placeholder | Description | Example of resolved value |
+|:----------------------- |:------------------------------------------------------- |:------------------------- |
+| `[STATUS]` | Resolves into the HTTP status of the request | 404
+| `[RESPONSE_TIME]` | Resolves into the response time the request took, in ms | 10
+| `[IP]` | Resolves into the IP of the target host | 192.168.0.232
+| `[BODY]` | Resolves into the response body. Supports JSONPath. | `{"name":"john.doe"}`
+| `[CONNECTED]` | Resolves into whether a connection could be established | `true`
+
+
+#### Functions
+
+| Function | Description | Example |
+|:-----------|:---------------------------------------------------------------------------------------------------------------- |:-------------------------- |
+| `len` | Returns the length of the object/slice. Works only with the `[BODY]` placeholder. | `len([BODY].username) > 8`
+| `pat` | Specifies that the string passed as parameter should be evaluated as a pattern. Works only with `==` and `!=`. | `[IP] == pat(192.168.*)`
+
+**NOTE**: Use `pat` only when you need to. `[STATUS] == pat(2*)` is a lot more expensive than `[STATUS] < 300`.
+
+
+
 ### Alerting
 
 
-
 #### Configuring Slack alerts
 
 ```yaml
 alerting:
-  slack: "https://hooks.slack.com/services/**********/**********/**********"
+  slack:
+    webhook-url: "https://hooks.slack.com/services/**********/**********/**********"
 services:
   - name: twinnation
-    interval: 30s
     url: "https://twinnation.org/health"
+    interval: 30s
     alerts:
       - type: slack
         enabled: true
````

```diff
@@ -144,7 +179,7 @@ services:
         send-on-resolved: true
       - type: slack
         enabled: true
-        threshold: 5
+        failure-threshold: 5
         description: "healthcheck failed 5 times in a row"
         send-on-resolved: true
     conditions:
```

````diff
@@ -167,18 +202,19 @@ PagerDuty instead.
 
 ```yaml
 alerting:
-  pagerduty: "********************************"
+  pagerduty:
+    integration-key: "********************************"
 services:
   - name: twinnation
-    interval: 30s
     url: "https://twinnation.org/health"
+    interval: 30s
     alerts:
       - type: pagerduty
         enabled: true
-        threshold: 3
-        description: "healthcheck failed 3 times in a row"
+        failure-threshold: 3
+        success-threshold: 5
         send-on-resolved: true
-        success-before-resolved: 5
+        description: "healthcheck failed 3 times in a row"
     conditions:
       - "[STATUS] == 200"
       - "[BODY].status == UP"
````
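The PagerDuty example above sets `failure-threshold: 3` (failures in a row before the alert triggers) and `success-threshold: 5` (successes in a row before the ongoing incident is marked as resolved). Below is a minimal Go sketch of that counting logic, loosely modeled on the removed `handleAlertsToTrigger` and `handleAlertsToResolve` functions further down in this diff. The `alertState` and `tracker` types and the `record` method are hypothetical names for illustration, not Gatus's API.

```go
package main

import "fmt"

// alertState is a hypothetical, simplified model of one alert on a service.
type alertState struct {
	FailureThreshold int  // failure-threshold: failures in a row before triggering
	SuccessThreshold int  // success-threshold: successes in a row before resolving
	Triggered        bool // whether an incident is currently open
}

// tracker counts consecutive results for a single service.
type tracker struct {
	failuresInARow  int
	successesInARow int
}

// record applies one health-check result and returns "TRIGGERED",
// "RESOLVED", or "" when no notification should be sent.
func (t *tracker) record(a *alertState, success bool) string {
	if success {
		t.successesInARow++
		t.failuresInARow = 0
		if a.Triggered && t.successesInARow >= a.SuccessThreshold {
			a.Triggered = false
			return "RESOLVED"
		}
		return ""
	}
	t.failuresInARow++
	t.successesInARow = 0
	// Comparing with == fires once per failure streak, like the removed code.
	if !a.Triggered && t.failuresInARow == a.FailureThreshold {
		a.Triggered = true
		return "TRIGGERED"
	}
	return ""
}

func main() {
	a := &alertState{FailureThreshold: 3, SuccessThreshold: 5}
	tr := &tracker{}
	// Three failures trigger the alert, then five successes resolve it.
	results := []bool{false, false, false, true, true, true, true, true}
	for i, ok := range results {
		if event := tr.record(a, ok); event != "" {
			fmt.Printf("check %d: %s\n", i+1, event)
		}
	}
	// Prints:
	// check 3: TRIGGERED
	// check 8: RESOLVED
}
```

Resetting the opposite counter on every result means a single success in the middle of a failure streak restarts the count, which is what makes `failure-threshold` mean "in a row".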

```diff
@@ -202,7 +238,8 @@ services:
     alerts:
       - type: twilio
         enabled: true
-        threshold: 5
+        failure-threshold: 5
+        send-on-resolved: true
         description: "healthcheck failed 5 times in a row"
     conditions:
       - "[STATUS] == 200"
```

```diff
@@ -239,12 +276,13 @@ alerting:
       }
 services:
   - name: twinnation
-    interval: 30s
     url: "https://twinnation.org/health"
+    interval: 30s
     alerts:
       - type: custom
         enabled: true
-        threshold: 10
+        failure-threshold: 10
+        success-threshold: 3
         send-on-resolved: true
         description: "healthcheck failed 10 times in a row"
     conditions:
```

````diff
@@ -256,10 +294,17 @@ services:
 
 ## Docker
 
+Other than using one of the examples provided in the `examples` folder, you can also try it out locally by
+creating a configuration file - we'll call it `config.yaml` for this example - and running the following
+command:
 ```
-docker run -p 8080:8080 --name gatus twinproduction/gatus
+docker run -p 8080:8080 --mount type=bind,source="$(pwd)"/config.yaml,target=/config/config.yaml --name gatus twinproduction/gatus
 ```
 
+If you're on Windows, replace `"$(pwd)"` with the absolute path to your current directory, e.g.:
+```
+docker run -p 8080:8080 --mount type=bind,source=C:/Users/Chris/Desktop/config.yaml,target=/config/config.yaml --name gatus twinproduction/gatus
+```
 
 ## Running the tests
 
````

````diff
@@ -306,3 +351,60 @@ will send a `POST` request to `http://localhost:8080/playground` with the follow
 ```json
 {"query":" {\n user(gender: \"female\") {\n id\n name\n gender\n avatar\n }\n }"}
 ```
+
+
+### Recommended interval
+
+To ensure that Gatus provides reliable and accurate results (i.e. response time), Gatus only evaluates one service at a time.
+In other words, even if you have multiple services with the exact same interval, they will not execute at the same time.
+
+You can test this yourself by running Gatus with several services configured with a very short, unrealistic interval,
+such as 1ms. You'll notice that the response time does not fluctuate - that is because while services are evaluated on
+different goroutines, there's a global lock that prevents multiple services from running at the same time.
+
+Unfortunately, there is a drawback. If you have a lot of services, including some that are very slow or prone to time out (the default
+timeout is 10s for HTTP and 5s for TCP), then it means that for the entire duration of the request, no other services can be evaluated.
+
+**This means that Gatus cannot evaluate the health of any other service while such a request is in progress**.
+The interval does not include the duration of the request itself, which means that if a service has an interval of 30s
+and the request takes 2s to complete, the time between two evaluations will be 32s, not 30s.
+
+While this does not prevent Gatus from performing health checks on all other services, it may cause Gatus to be unable
+to respect the configured interval, for instance:
+- Service A has an interval of 5s, and takes 10s to time out
+- Service B has an interval of 5s, and takes 1ms to complete
+- Service B will be unable to run every 5s, because service A's health evaluation takes longer than its interval
+
+To sum it up, while Gatus can really handle any interval you throw at it, you're better off giving slow requests a
+higher interval.
+
+As a rule of thumb, I personally set the interval for more complex health checks to `5m` (5 minutes) and
+simple health checks used for alerting (PagerDuty/Twilio) to `30s`.
+
+
+### Default timeouts
+
+| Protocol | Timeout |
+|:-------- |:------- |
+| HTTP | 10s
+| TCP | 5s
+
+
+### Monitoring a TCP service
+
+By prefixing `services[].url` with `tcp://`, you can monitor TCP services at a very basic level:
+
+```yaml
+- name: redis
+  url: "tcp://127.0.0.1:6379"
+  interval: 30s
+  conditions:
+    - "[CONNECTED] == true"
+```
+
+Placeholders `[STATUS]` and `[BODY]` as well as the fields `services[].body`, `services[].insecure`,
+`services[].headers`, `services[].method` and `services[].graphql` are not supported for TCP services.
+
+**NOTE**: `[CONNECTED] == true` does not guarantee that the service itself is healthy - it only guarantees that there's
+something at the given address listening to the given port, and that a connection to that address was successfully
+established.
````


```diff
@@ -1,158 +0,0 @@
-package alerting
-
-import (
-	"encoding/json"
-	"fmt"
-	"github.com/TwinProduction/gatus/config"
-	"github.com/TwinProduction/gatus/core"
-	"log"
-)
-
-// Handle takes care of alerts to resolve and alerts to trigger based on result success or failure
-func Handle(service *core.Service, result *core.Result) {
-	cfg := config.Get()
-	if cfg.Alerting == nil {
-		return
-	}
-	if result.Success {
-		handleAlertsToResolve(service, result, cfg)
-	} else {
-		handleAlertsToTrigger(service, result, cfg)
-	}
-}
-
-func handleAlertsToTrigger(service *core.Service, result *core.Result, cfg *config.Config) {
-	service.NumberOfSuccessesInARow = 0
-	service.NumberOfFailuresInARow++
-	for _, alert := range service.Alerts {
-		// If the alert hasn't been triggered, move to the next one
-		if !alert.Enabled || alert.Threshold != service.NumberOfFailuresInARow {
-			continue
-		}
-		if alert.Triggered {
-			if cfg.Debug {
-				log.Printf("[alerting][handleAlertsToTrigger] Alert with description='%s' has already been triggered, skipping", alert.Description)
-			}
-			continue
-		}
-		var alertProvider *core.CustomAlertProvider
-		if alert.Type == core.SlackAlert {
-			if len(cfg.Alerting.Slack) > 0 {
-				log.Printf("[alerting][handleAlertsToTrigger] Sending Slack alert because alert with description='%s' has been triggered", alert.Description)
-				alertProvider = core.CreateSlackCustomAlertProvider(cfg.Alerting.Slack, service, alert, result, false)
-			} else {
-				log.Printf("[alerting][handleAlertsToTrigger] Not sending Slack alert despite being triggered, because there is no Slack webhook configured")
-			}
-		} else if alert.Type == core.PagerDutyAlert {
-			if len(cfg.Alerting.PagerDuty) > 0 {
-				log.Printf("[alerting][handleAlertsToTrigger] Sending PagerDuty alert because alert with description='%s' has been triggered", alert.Description)
-				alertProvider = core.CreatePagerDutyCustomAlertProvider(cfg.Alerting.PagerDuty, "trigger", "", service, fmt.Sprintf("TRIGGERED: %s - %s", service.Name, alert.Description))
-			} else {
-				log.Printf("[alerting][handleAlertsToTrigger] Not sending PagerDuty alert despite being triggered, because PagerDuty isn't configured properly")
-			}
-		} else if alert.Type == core.TwilioAlert {
-			if cfg.Alerting.Twilio != nil && cfg.Alerting.Twilio.IsValid() {
-				log.Printf("[alerting][handleAlertsToTrigger] Sending Twilio alert because alert with description='%s' has been triggered", alert.Description)
-				alertProvider = core.CreateTwilioCustomAlertProvider(cfg.Alerting.Twilio, fmt.Sprintf("TRIGGERED: %s - %s", service.Name, alert.Description))
-			} else {
-				log.Printf("[alerting][handleAlertsToTrigger] Not sending Twilio alert despite being triggered, because Twilio config settings missing")
-			}
-		} else if alert.Type == core.CustomAlert {
-			if cfg.Alerting.Custom != nil && cfg.Alerting.Custom.IsValid() {
-				log.Printf("[alerting][handleAlertsToTrigger] Sending custom alert because alert with description='%s' has been triggered", alert.Description)
-				alertProvider = &core.CustomAlertProvider{
-					Url:     cfg.Alerting.Custom.Url,
-					Method:  cfg.Alerting.Custom.Method,
-					Body:    cfg.Alerting.Custom.Body,
-					Headers: cfg.Alerting.Custom.Headers,
-				}
-			} else {
-				log.Printf("[alerting][handleAlertsToTrigger] Not sending custom alert despite being triggered, because there is no custom url configured")
-			}
-		}
-		if alertProvider != nil {
-			// TODO: retry on error
-			var err error
-			if alert.Type == core.PagerDutyAlert {
-				var body []byte
-				body, err = alertProvider.Send(service.Name, alert.Description, true)
-				if err == nil {
-					var response pagerDutyResponse
-					err = json.Unmarshal(body, &response)
-					if err != nil {
-						log.Printf("[alerting][handleAlertsToTrigger] Ran into error unmarshaling pager duty response: %s", err.Error())
-					} else {
-						alert.ResolveKey = response.DedupKey
-					}
-				}
-			} else {
-				_, err = alertProvider.Send(service.Name, alert.Description, false)
-			}
-			if err != nil {
-				log.Printf("[alerting][handleAlertsToTrigger] Ran into error sending an alert: %s", err.Error())
-			} else {
-				alert.Triggered = true
-			}
-		}
-	}
-}
-
-func handleAlertsToResolve(service *core.Service, result *core.Result, cfg *config.Config) {
-	service.NumberOfSuccessesInARow++
-	for _, alert := range service.Alerts {
-		if !alert.Enabled || !alert.Triggered || alert.SuccessBeforeResolved > service.NumberOfSuccessesInARow {
-			continue
-		}
-		alert.Triggered = false
-		if !alert.SendOnResolved {
-			continue
-		}
-		var alertProvider *core.CustomAlertProvider
-		if alert.Type == core.SlackAlert {
-			if len(cfg.Alerting.Slack) > 0 {
-				log.Printf("[alerting][handleAlertsToResolve] Sending Slack alert because alert with description='%s' has been resolved", alert.Description)
-				alertProvider = core.CreateSlackCustomAlertProvider(cfg.Alerting.Slack, service, alert, result, true)
-			} else {
-				log.Printf("[alerting][handleAlertsToResolve] Not sending Slack alert despite being resolved, because there is no Slack webhook configured")
-			}
-		} else if alert.Type == core.PagerDutyAlert {
-			if len(cfg.Alerting.PagerDuty) > 0 {
-				log.Printf("[alerting][handleAlertsToResolve] Sending PagerDuty alert because alert with description='%s' has been resolved", alert.Description)
-				alertProvider = core.CreatePagerDutyCustomAlertProvider(cfg.Alerting.PagerDuty, "resolve", alert.ResolveKey, service, fmt.Sprintf("RESOLVED: %s - %s", service.Name, alert.Description))
-			} else {
-				log.Printf("[alerting][handleAlertsToResolve] Not sending PagerDuty alert despite being resolved, because PagerDuty isn't configured properly")
-			}
-		} else if alert.Type == core.TwilioAlert {
-			if cfg.Alerting.Twilio != nil && cfg.Alerting.Twilio.IsValid() {
-				log.Printf("[alerting][handleAlertsToResolve] Sending Twilio alert because alert with description='%s' has been resolved", alert.Description)
-				alertProvider = core.CreateTwilioCustomAlertProvider(cfg.Alerting.Twilio, fmt.Sprintf("RESOLVED: %s - %s", service.Name, alert.Description))
-			} else {
-				log.Printf("[alerting][handleAlertsToResolve] Not sending Twilio alert despite being resolved, because Twilio isn't configured properly")
-			}
-		} else if alert.Type == core.CustomAlert {
-			if cfg.Alerting.Custom != nil && cfg.Alerting.Custom.IsValid() {
-				log.Printf("[alerting][handleAlertsToResolve] Sending custom alert because alert with description='%s' has been resolved", alert.Description)
-				alertProvider = &core.CustomAlertProvider{
-					Url:     cfg.Alerting.Custom.Url,
-					Method:  cfg.Alerting.Custom.Method,
-					Body:    cfg.Alerting.Custom.Body,
-					Headers: cfg.Alerting.Custom.Headers,
-				}
-			} else {
-				log.Printf("[alerting][handleAlertsToResolve] Not sending custom alert despite being resolved, because the custom provider isn't configured properly")
-			}
-		}
-		if alertProvider != nil {
-			// TODO: retry on error
-			_, err := alertProvider.Send(service.Name, alert.Description, true)
-			if err != nil {
-				log.Printf("[alerting][handleAlertsToResolve] Ran into error sending an alert: %s", err.Error())
-			} else {
-				if alert.Type == core.PagerDutyAlert {
-					alert.ResolveKey = ""
-				}
-			}
-		}
-	}
-	service.NumberOfFailuresInARow = 0
-}
```

`alerting/config.go` · Normal file · 15 lines changed

```diff
@@ -0,0 +1,15 @@
+package alerting
+
+import (
+	"github.com/TwinProduction/gatus/alerting/provider/custom"
+	"github.com/TwinProduction/gatus/alerting/provider/pagerduty"
+	"github.com/TwinProduction/gatus/alerting/provider/slack"
+	"github.com/TwinProduction/gatus/alerting/provider/twilio"
+)
+
+type Config struct {
+	Slack     *slack.AlertProvider     `yaml:"slack"`
+	PagerDuty *pagerduty.AlertProvider `yaml:"pagerduty"`
+	Twilio    *twilio.AlertProvider    `yaml:"twilio"`
+	Custom    *custom.AlertProvider    `yaml:"custom"`
+}
```

```diff
@@ -1,7 +0,0 @@
-package alerting
-
-type pagerDutyResponse struct {
-	Status   string `json:"status"`
-	Message  string `json:"message"`
-	DedupKey string `json:"dedup_key"`
-}
```
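The `pagerDutyResponse` struct removed above is what the old alerting code unmarshaled PagerDuty's reply into: on trigger it stored `DedupKey` as the alert's `ResolveKey`, and the later `resolve` event reused that key so PagerDuty closes the same incident. A minimal Go sketch of that decoding step with `encoding/json` follows; `resolveKeyFrom` is a hypothetical helper, and the sample payload is illustrative only, assuming the `status`/`message`/`dedup_key` shape that the struct's tags describe.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// pagerDutyResponse mirrors the struct removed in this diff.
type pagerDutyResponse struct {
	Status   string `json:"status"`
	Message  string `json:"message"`
	DedupKey string `json:"dedup_key"`
}

// resolveKeyFrom is a hypothetical helper that extracts the dedup key to keep
// as the alert's resolve key after a successful trigger event.
func resolveKeyFrom(body []byte) (string, error) {
	var response pagerDutyResponse
	if err := json.Unmarshal(body, &response); err != nil {
		return "", fmt.Errorf("unmarshaling PagerDuty response: %w", err)
	}
	return response.DedupKey, nil
}

func main() {
	// Illustrative payload; the key value is made up.
	body := []byte(`{"status":"success","message":"Event processed","dedup_key":"example-dedup-key"}`)
	key, err := resolveKeyFrom(body)
	if err != nil {
		panic(err)
	}
	fmt.Println(key) // example-dedup-key
}
```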

89

alerting/provider/custom/custom.go

Normal file

@@ -0,0 +1,89 @@

package custom

import (
	"bytes"
	"fmt"
	"github.com/TwinProduction/gatus/client"
	"github.com/TwinProduction/gatus/core"
	"io/ioutil"
	"net/http"
	"strings"
)

// AlertProvider is the configuration necessary for sending an alert using a custom HTTP request
// Technically, all alert providers should be reachable using the custom alert provider
type AlertProvider struct {
	Url string `yaml:"url"`
	Method string `yaml:"method,omitempty"`
	Body string `yaml:"body,omitempty"`
	Headers map[string]string `yaml:"headers,omitempty"`
}

// IsValid returns whether the provider's configuration is valid
func (provider *AlertProvider) IsValid() bool {
	return len(provider.Url) > 0
}

// ToCustomAlertProvider converts the provider into a custom.AlertProvider
func (provider *AlertProvider) ToCustomAlertProvider(service *core.Service, alert *core.Alert, result *core.Result, resolved bool) *AlertProvider {
	return provider
}

func (provider *AlertProvider) buildRequest(serviceName, alertDescription string, resolved bool) *http.Request {
	body := provider.Body
	providerUrl := provider.Url
	method := provider.Method
	if strings.Contains(body, "[ALERT_DESCRIPTION]") {
		body = strings.ReplaceAll(body, "[ALERT_DESCRIPTION]", alertDescription)
	}
	if strings.Contains(body, "[SERVICE_NAME]") {
		body = strings.ReplaceAll(body, "[SERVICE_NAME]", serviceName)
	}
	if strings.Contains(body, "[ALERT_TRIGGERED_OR_RESOLVED]") {
		if resolved {
			body = strings.ReplaceAll(body, "[ALERT_TRIGGERED_OR_RESOLVED]", "RESOLVED")
		} else {
			body = strings.ReplaceAll(body, "[ALERT_TRIGGERED_OR_RESOLVED]", "TRIGGERED")
		}
	}
	if strings.Contains(providerUrl, "[ALERT_DESCRIPTION]") {
		providerUrl = strings.ReplaceAll(providerUrl, "[ALERT_DESCRIPTION]", alertDescription)
	}
	if strings.Contains(providerUrl, "[SERVICE_NAME]") {
		providerUrl = strings.ReplaceAll(providerUrl, "[SERVICE_NAME]", serviceName)
	}
	if strings.Contains(providerUrl, "[ALERT_TRIGGERED_OR_RESOLVED]") {
		if resolved {
			providerUrl = strings.ReplaceAll(providerUrl, "[ALERT_TRIGGERED_OR_RESOLVED]", "RESOLVED")
		} else {
			providerUrl = strings.ReplaceAll(providerUrl, "[ALERT_TRIGGERED_OR_RESOLVED]", "TRIGGERED")
		}
	}
	if len(method) == 0 {
		method = "GET"
	}
	bodyBuffer := bytes.NewBuffer([]byte(body))
	request, _ := http.NewRequest(method, providerUrl, bodyBuffer)
	for k, v := range provider.Headers {
		request.Header.Set(k, v)
	}
	return request
}

// Send a request to the alert provider and return the body
func (provider *AlertProvider) Send(serviceName, alertDescription string, resolved bool) ([]byte, error) {
	request := provider.buildRequest(serviceName, alertDescription, resolved)
	response, err := client.GetHttpClient(false).Do(request)
	if err != nil {
		return nil, err
	}
	if response.StatusCode > 399 {
		body, err := ioutil.ReadAll(response.Body)
		if err != nil {
			return nil, fmt.Errorf("call to provider alert returned status code %d", response.StatusCode)
		} else {
			return nil, fmt.Errorf("call to provider alert returned status code %d: %s", response.StatusCode, string(body))
		}
	}
	return ioutil.ReadAll(response.Body)
}
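`buildRequest` substitutes the three placeholders with sequential `strings.ReplaceAll` calls; the `strings.Contains` guards are not strictly needed, since `ReplaceAll` returns the input unchanged when the placeholder is absent. A minimal sketch of the same substitution done in one pass with `strings.NewReplacer`, using a hypothetical `expandPlaceholders` helper. One behavioural difference to be aware of: a single-pass replacer does not rescan substituted text, so an alert description that itself contains `[SERVICE_NAME]` is left as-is, whereas the sequential calls above would expand it.

```go
package main

import (
	"fmt"
	"strings"
)

// expandPlaceholders is a hypothetical helper that substitutes the placeholders
// supported by the custom alert provider in a single pass over template.
func expandPlaceholders(template, serviceName, alertDescription string, resolved bool) string {
	status := "TRIGGERED"
	if resolved {
		status = "RESOLVED"
	}
	replacer := strings.NewReplacer(
		"[SERVICE_NAME]", serviceName,
		"[ALERT_DESCRIPTION]", alertDescription,
		"[ALERT_TRIGGERED_OR_RESOLVED]", status,
	)
	return replacer.Replace(template)
}

func main() {
	fmt.Println(expandPlaceholders("http://example.com/[SERVICE_NAME]", "service-name", "alert-description", true))
	fmt.Println(expandPlaceholders("[SERVICE_NAME],[ALERT_DESCRIPTION],[ALERT_TRIGGERED_OR_RESOLVED]", "service-name", "alert-description", false))
}
```

The same helper could be applied to both the URL and the body, which would remove the duplicated blocks in `buildRequest`.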

@@ -1,16 +1,27 @@

package core
package custom

import (
	"io/ioutil"
	"testing"
)

func TestCustomAlertProvider_buildRequestWhenResolved(t *testing.T) {
func TestAlertProvider_IsValid(t *testing.T) {
	invalidProvider := AlertProvider{Url: ""}
	if invalidProvider.IsValid() {
		t.Error("provider shouldn't have been valid")
	}
	validProvider := AlertProvider{Url: "http://example.com"}
	if !validProvider.IsValid() {
		t.Error("provider should've been valid")
	}
}

func TestAlertProvider_buildRequestWhenResolved(t *testing.T) {
	const (
		ExpectedUrl = "http://example.com/service-name"
		ExpectedBody = "service-name,alert-description,RESOLVED"
	)
	customAlertProvider := &CustomAlertProvider{
	customAlertProvider := &AlertProvider{
		Url: "http://example.com/[SERVICE_NAME]",
		Method: "GET",
		Body: "[SERVICE_NAME],[ALERT_DESCRIPTION],[ALERT_TRIGGERED_OR_RESOLVED]",

@@ -26,12 +37,12 @@ func TestCustomAlertProvider_buildRequestWhenResolved(t *testing.T) {

	}
}

func TestCustomAlertProvider_buildRequestWhenTriggered(t *testing.T) {
func TestAlertProvider_buildRequestWhenTriggered(t *testing.T) {
	const (
		ExpectedUrl = "http://example.com/service-name"
		ExpectedBody = "service-name,alert-description,TRIGGERED"
	)
	customAlertProvider := &CustomAlertProvider{
	customAlertProvider := &AlertProvider{
		Url: "http://example.com/[SERVICE_NAME]",
		Method: "GET",
		Body: "[SERVICE_NAME],[ALERT_DESCRIPTION],[ALERT_TRIGGERED_OR_RESOLVED]",

48

alerting/provider/pagerduty/pagerduty.go

Normal file

@@ -0,0 +1,48 @@

package pagerduty

import (
	"fmt"
	"github.com/TwinProduction/gatus/alerting/provider/custom"
	"github.com/TwinProduction/gatus/core"
)

// AlertProvider is the configuration necessary for sending an alert using PagerDuty
type AlertProvider struct {
	IntegrationKey string `yaml:"integration-key"`
}

// IsValid returns whether the provider's configuration is valid
func (provider *AlertProvider) IsValid() bool {
	return len(provider.IntegrationKey) == 32
}

// https://developer.pagerduty.com/docs/events-api-v2/trigger-events/
func (provider *AlertProvider) ToCustomAlertProvider(service *core.Service, alert *core.Alert, result *core.Result, resolved bool) *custom.AlertProvider {
	var message, eventAction, resolveKey string
	if resolved {
		message = fmt.Sprintf("RESOLVED: %s - %s", service.Name, alert.Description)
		eventAction = "resolve"
		resolveKey = alert.ResolveKey
	} else {
		message = fmt.Sprintf("TRIGGERED: %s - %s", service.Name, alert.Description)
		eventAction = "trigger"
		resolveKey = ""
	}
	return &custom.AlertProvider{
		Url: "https://events.pagerduty.com/v2/enqueue",
		Method: "POST",
		Body: fmt.Sprintf(`{
  "routing_key": "%s",
  "dedup_key": "%s",
  "event_action": "%s",
  "payload": {
    "summary": "%s",
    "source": "%s",
    "severity": "critical"
  }
}`, provider.IntegrationKey, resolveKey, eventAction, message, service.Name),
		Headers: map[string]string{
			"Content-Type": "application/json",
		},
	}
}

14

alerting/provider/pagerduty/pagerduty_test.go

Normal file

@@ -0,0 +1,14 @@

package pagerduty

import "testing"

func TestAlertProvider_IsValid(t *testing.T) {
	invalidProvider := AlertProvider{IntegrationKey: ""}
	if invalidProvider.IsValid() {
		t.Error("provider shouldn't have been valid")
	}
	validProvider := AlertProvider{IntegrationKey: "00000000000000000000000000000000"}
	if !validProvider.IsValid() {
		t.Error("provider should've been valid")
	}
}

15

alerting/provider/provider.go

Normal file

@@ -0,0 +1,15 @@

package provider

import (
	"github.com/TwinProduction/gatus/alerting/provider/custom"
	"github.com/TwinProduction/gatus/core"
)

// AlertProvider is the interface that each providers should implement
type AlertProvider interface {
	// IsValid returns whether the provider's configuration is valid
	IsValid() bool

	// ToCustomAlertProvider converts the provider into a custom.AlertProvider
	ToCustomAlertProvider(service *core.Service, alert *core.Alert, result *core.Result, resolved bool) *custom.AlertProvider
}
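Each provider package (`slack`, `pagerduty`, `twilio`, `custom`) is expected to satisfy the `provider.AlertProvider` interface, but nothing in the listing checks that at compile time. A common Go idiom is a blank-identifier assignment next to the type. The sketch below uses a trimmed-down, hypothetical interface and provider type (the real interface also requires `ToCustomAlertProvider`).

```go
package main

import "fmt"

// AlertProvider is a trimmed-down, hypothetical stand-in for provider.AlertProvider.
type AlertProvider interface {
	IsValid() bool
}

// webhookProvider is a hypothetical provider used only for illustration.
type webhookProvider struct {
	WebhookUrl string
}

func (p *webhookProvider) IsValid() bool {
	return len(p.WebhookUrl) > 0
}

// This blank assignment fails to compile if *webhookProvider ever stops
// satisfying AlertProvider, for example after the interface gains a method.
var _ AlertProvider = (*webhookProvider)(nil)

func main() {
	providers := []AlertProvider{
		&webhookProvider{},
		&webhookProvider{WebhookUrl: "http://example.com"},
	}
	for _, p := range providers {
		fmt.Println(p.IsValid())
	}
}
```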

63

alerting/provider/slack/slack.go

Normal file

@@ -0,0 +1,63 @@

package slack

import (
	"fmt"
	"github.com/TwinProduction/gatus/alerting/provider/custom"
	"github.com/TwinProduction/gatus/core"
)

// AlertProvider is the configuration necessary for sending an alert using Slack
type AlertProvider struct {
	WebhookUrl string `yaml:"webhook-url"`
}

// IsValid returns whether the provider's configuration is valid
func (provider *AlertProvider) IsValid() bool {
	return len(provider.WebhookUrl) > 0
}

// ToCustomAlertProvider converts the provider into a custom.AlertProvider
func (provider *AlertProvider) ToCustomAlertProvider(service *core.Service, alert *core.Alert, result *core.Result, resolved bool) *custom.AlertProvider {
	var message string
	var color string
	if resolved {
		message = fmt.Sprintf("An alert for *%s* has been resolved after passing successfully %d time(s) in a row", service.Name, alert.SuccessThreshold)
		color = "#36A64F"
	} else {
		message = fmt.Sprintf("An alert for *%s* has been triggered due to having failed %d time(s) in a row", service.Name, alert.FailureThreshold)
		color = "#DD0000"
	}
	var results string
	for _, conditionResult := range result.ConditionResults {
		var prefix string
		if conditionResult.Success {
			prefix = ":heavy_check_mark:"
		} else {
			prefix = ":x:"
		}
		results += fmt.Sprintf("%s - `%s`\n", prefix, conditionResult.Condition)
	}
	return &custom.AlertProvider{
		Url: provider.WebhookUrl,
		Method: "POST",
		Body: fmt.Sprintf(`{
  "text": "",
  "attachments": [
    {
      "title": ":helmet_with_white_cross: Gatus",
      "text": "%s:\n> %s",
      "short": false,
      "color": "%s",
      "fields": [
        {
          "title": "Condition results",
          "value": "%s",
          "short": false
        }
      ]
    },
  ]
}`, message, alert.Description, color, results),
		Headers: map[string]string{"Content-Type": "application/json"},
	}
}

14

alerting/provider/slack/slack_test.go

Normal file

@@ -0,0 +1,14 @@

package slack

import "testing"

func TestAlertProvider_IsValid(t *testing.T) {
	invalidProvider := AlertProvider{WebhookUrl: ""}
	if invalidProvider.IsValid() {
		t.Error("provider shouldn't have been valid")
	}
	validProvider := AlertProvider{WebhookUrl: "http://example.com"}
	if !validProvider.IsValid() {
		t.Error("provider should've been valid")
	}
}

45

alerting/provider/twilio/twilio.go

Normal file

@@ -0,0 +1,45 @@

package twilio

import (
	"encoding/base64"
	"fmt"
	"github.com/TwinProduction/gatus/alerting/provider/custom"
	"github.com/TwinProduction/gatus/core"
	"net/url"
)

// AlertProvider is the configuration necessary for sending an alert using Twilio
type AlertProvider struct {
	SID string `yaml:"sid"`
	Token string `yaml:"token"`
	From string `yaml:"from"`
	To string `yaml:"to"`
}

// IsValid returns whether the provider's configuration is valid
func (provider *AlertProvider) IsValid() bool {
	return len(provider.Token) > 0 && len(provider.SID) > 0 && len(provider.From) > 0 && len(provider.To) > 0
}

// ToCustomAlertProvider converts the provider into a custom.AlertProvider
func (provider *AlertProvider) ToCustomAlertProvider(service *core.Service, alert *core.Alert, result *core.Result, resolved bool) *custom.AlertProvider {
	var message string
	if resolved {
		message = fmt.Sprintf("RESOLVED: %s - %s", service.Name, alert.Description)
	} else {
		message = fmt.Sprintf("TRIGGERED: %s - %s", service.Name, alert.Description)
	}
	return &custom.AlertProvider{
		Url: fmt.Sprintf("https://api.twilio.com/2010-04-01/Accounts/%s/Messages.json", provider.SID),
		Method: "POST",
		Body: url.Values{
			"To": {provider.To},
			"From": {provider.From},
			"Body": {message},
		}.Encode(),
		Headers: map[string]string{
			"Content-Type": "application/x-www-form-urlencoded",
			"Authorization": fmt.Sprintf("Basic %s", base64.StdEncoding.EncodeToString([]byte(fmt.Sprintf("%s:%s", provider.SID, provider.Token)))),
		},
	}
}

19

alerting/provider/twilio/twilio_test.go

Normal file

@@ -0,0 +1,19 @@

package twilio

import "testing"

func TestTwilioAlertProvider_IsValid(t *testing.T) {
	invalidProvider := AlertProvider{}
	if invalidProvider.IsValid() {
		t.Error("provider shouldn't have been valid")
	}
	validProvider := AlertProvider{
		SID: "1",
		Token: "1",
		From: "1",
		To: "1",
	}
	if !validProvider.IsValid() {
		t.Error("provider should've been valid")
	}
}

@@ -1,19 +1,45 @@

package client

import (
	"crypto/tls"
	"net"
	"net/http"
	"time"
)

var (
	client *http.Client
	secureHttpClient *http.Client
	insecureHttpClient *http.Client
)

func GetHttpClient() *http.Client {
	if client == nil {
		client = &http.Client{
			Timeout: time.Second * 10,
func GetHttpClient(insecure bool) *http.Client {
	if insecure {
		if insecureHttpClient == nil {
			insecureHttpClient = &http.Client{
				Timeout: time.Second * 10,
				Transport: &http.Transport{
					TLSClientConfig: &tls.Config{
						InsecureSkipVerify: true,
					},
				},
			}
		}
		return insecureHttpClient
	} else {
		if secureHttpClient == nil {
			secureHttpClient = &http.Client{
				Timeout: time.Second * 10,
			}
		}
		return secureHttpClient
	}
	return client
}

func CanCreateConnectionToTcpService(address string) bool {
	conn, err := net.DialTimeout("tcp", address, 5*time.Second)
	if err != nil {
		return false
	}
	_ = conn.Close()
	return true
}

28

client/client_test.go

Normal file

@@ -0,0 +1,28 @@

package client

import (
	"testing"
)

func TestGetHttpClient(t *testing.T) {
	if secureHttpClient != nil {
		t.Error("secureHttpClient should've been nil since it hasn't been called a single time yet")
	}
	if insecureHttpClient != nil {
		t.Error("insecureHttpClient should've been nil since it hasn't been called a single time yet")
	}
	_ = GetHttpClient(false)
	if secureHttpClient == nil {
		t.Error("secureHttpClient shouldn't have been nil, since it has been called once")
	}
	if insecureHttpClient != nil {
		t.Error("insecureHttpClient should've been nil since it hasn't been called a single time yet")
	}
	_ = GetHttpClient(true)
	if secureHttpClient == nil {
		t.Error("secureHttpClient shouldn't have been nil, since it has been called once")
	}
	if insecureHttpClient == nil {
		t.Error("insecureHttpClient shouldn't have been nil, since it has been called once")
	}
}

@@ -1,15 +1,15 @@

metrics: true
services:
  - name: twinnation
    url: "https://twinnation.org/health"
    interval: 30s
    url: https://twinnation.org/health
    conditions:
      - "[STATUS] == 200"
      - "[BODY].status == UP"
      - "[RESPONSE_TIME] < 1000"
  - name: cat-fact
    interval: 1m
    url: "https://cat-fact.herokuapp.com/facts/random"
    interval: 1m
    conditions:
      - "[STATUS] == 200"
      - "[BODY].deleted == false"

@@ -2,6 +2,8 @@ package config

import (
	"errors"
	"github.com/TwinProduction/gatus/alerting"
	"github.com/TwinProduction/gatus/alerting/provider"
	"github.com/TwinProduction/gatus/core"
	"gopkg.in/yaml.v2"
	"io/ioutil"

@@ -10,6 +12,8 @@ import (

)

const (
	// DefaultConfigurationFilePath is the default path that will be used to search for the configuration file
	// if a custom path isn't configured through the GATUS_CONFIG_FILE environment variable
	DefaultConfigurationFilePath = "config/config.yaml"
)

@@ -20,11 +24,12 @@ var (

	config *Config
)

// Config is the main configuration structure
type Config struct {
	Metrics bool `yaml:"metrics"`
	Debug bool `yaml:"debug"`
	Alerting *core.AlertingConfig `yaml:"alerting"`
	Services []*core.Service `yaml:"services"`
	Metrics bool `yaml:"metrics"`
	Debug bool `yaml:"debug"`
	Alerting *alerting.Config `yaml:"alerting"`
	Services []*core.Service `yaml:"services"`
}

func Get() *Config {

@@ -35,7 +40,7 @@ func Get() *Config {

}

func Load(configFile string) error {
	log.Printf("[config][Load] Attempting to load config from configFile=%s", configFile)
	log.Printf("[config][Load] Reading configuration from configFile=%s", configFile)
	cfg, err := readConfigurationFile(configFile)
	if err != nil {
		if os.IsNotExist(err) {

@@ -74,13 +79,79 @@ func parseAndValidateConfigBytes(yamlBytes []byte) (config *Config, err error) {

	// Parse configuration file
	err = yaml.Unmarshal(yamlBytes, &config)
	// Check if the configuration file at least has services.
	if config == nil || len(config.Services) == 0 {
	if config == nil || config.Services == nil || len(config.Services) == 0 {
		err = ErrNoServiceInConfig
	} else {
		// Set the default values if they aren't set
		for _, service := range config.Services {
			service.Validate()
		}
		validateAlertingConfig(config)
		validateServicesConfig(config)
	}
	return
}

func validateServicesConfig(config *Config) {
	for _, service := range config.Services {
		if config.Debug {
			log.Printf("[config][validateServicesConfig] Validating service '%s'", service.Name)
		}
		service.ValidateAndSetDefaults()
	}
	log.Printf("[config][validateServicesConfig] Validated %d services", len(config.Services))
}

func validateAlertingConfig(config *Config) {
	if config.Alerting == nil {
		log.Printf("[config][validateAlertingConfig] Alerting is not configured")
		return
	}
	alertTypes := []core.AlertType{
		core.SlackAlert,
		core.TwilioAlert,
		core.PagerDutyAlert,
		core.CustomAlert,
	}
	var validProviders, invalidProviders []core.AlertType
	for _, alertType := range alertTypes {
		alertProvider := GetAlertingProviderByAlertType(config, alertType)
		if alertProvider != nil {
			if alertProvider.IsValid() {
				validProviders = append(validProviders, alertType)
			} else {
				log.Printf("[config][validateAlertingConfig] Ignoring provider=%s because configuration is invalid", alertType)
				invalidProviders = append(invalidProviders, alertType)
			}
		} else {
			invalidProviders = append(invalidProviders, alertType)
		}
	}
	log.Printf("[config][validateAlertingConfig] configuredProviders=%s; ignoredProviders=%s", validProviders, invalidProviders)
}

func GetAlertingProviderByAlertType(config *Config, alertType core.AlertType) provider.AlertProvider {
	switch alertType {
	case core.SlackAlert:
		if config.Alerting.Slack == nil {
			// Since we're returning an interface, we need to explicitly return nil, even if the provider itself is nil
			return nil
		}
		return config.Alerting.Slack
	case core.TwilioAlert:
		if config.Alerting.Twilio == nil {
			// Since we're returning an interface, we need to explicitly return nil, even if the provider itself is nil
			return nil
		}
		return config.Alerting.Twilio
	case core.PagerDutyAlert:
		if config.Alerting.PagerDuty == nil {
			// Since we're returning an interface, we need to explicitly return nil, even if the provider itself is nil
			return nil
		}
		return config.Alerting.PagerDuty
	case core.CustomAlert:
		if config.Alerting.Custom == nil {
			// Since we're returning an interface, we need to explicitly return nil, even if the provider itself is nil
			return nil
		}
		return config.Alerting.Custom
	}
	return nil
}
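The comment "Since we're returning an interface, we need to explicitly return nil" refers to Go's typed-nil behaviour: an interface holding a nil `*T` is itself non-nil. Without the explicit `return nil`, the `alertProvider != nil` check in `validateAlertingConfig` would pass for an unconfigured provider, and `IsValid()` would then dereference a nil pointer, since these providers read a struct field. A minimal demonstration with a trimmed-down, hypothetical interface and provider type.

```go
package main

import "fmt"

// AlertProvider is a trimmed-down, hypothetical stand-in for provider.AlertProvider.
type AlertProvider interface {
	IsValid() bool
}

// slackProvider is a hypothetical concrete provider.
type slackProvider struct{ WebhookUrl string }

// IsValid guards against a nil receiver here only so the demo cannot panic.
func (p *slackProvider) IsValid() bool { return p != nil && len(p.WebhookUrl) > 0 }

// naive returns the pointer directly, wrapping a nil *slackProvider in a non-nil interface.
func naive(p *slackProvider) AlertProvider {
	return p
}

// explicit mirrors GetAlertingProviderByAlertType: return a literal nil when the
// pointer is nil, so callers can compare the interface against nil.
func explicit(p *slackProvider) AlertProvider {
	if p == nil {
		return nil
	}
	return p
}

func main() {
	var unset *slackProvider
	fmt.Println(naive(unset) == nil)    // false: the interface holds (*slackProvider)(nil)
	fmt.Println(explicit(unset) == nil) // true
}
```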

@@ -1,7 +1,6 @@

package config

import (
	"fmt"
	"github.com/TwinProduction/gatus/core"
	"testing"
	"time"

@@ -36,7 +35,6 @@ services:

	if config.Services[1].Url != "https://api.github.com/healthz" {
		t.Errorf("URL should have been %s", "https://api.github.com/healthz")
	}
	fmt.Println(config.Services[0].Interval)
	if config.Services[0].Interval != 15*time.Second {
		t.Errorf("Interval should have been %s", 15*time.Second)
	}

@@ -121,14 +119,17 @@ badconfig:

func TestParseAndValidateConfigBytesWithAlerting(t *testing.T) {
	config, err := parseAndValidateConfigBytes([]byte(`
alerting:
  slack: "http://example.com"
  slack:
    webhook-url: "http://example.com"
  pagerduty:
    integration-key: "00000000000000000000000000000000"
services:
  - name: twinnation
    url: https://twinnation.org/actuator/health
    alerts:
      - type: slack
        enabled: true
        threshold: 7
        failure-threshold: 7
        description: "Healthcheck failed 7 times in a row"
    conditions:
      - "[STATUS] == 200"

@@ -143,10 +144,19 @@ services:

		t.Error("Metrics should've been false by default")
	}
	if config.Alerting == nil {
		t.Fatal("config.AlertingConfig shouldn't have been nil")
		t.Fatal("config.Alerting shouldn't have been nil")
	}
	if config.Alerting.Slack != "http://example.com" {
		t.Errorf("Slack webhook should've been %s, but was %s", "http://example.com", config.Alerting.Slack)
	if config.Alerting.Slack == nil || !config.Alerting.Slack.IsValid() {
		t.Fatal("Slack alerting config should've been valid")
	}
	if config.Alerting.Slack.WebhookUrl != "http://example.com" {
		t.Errorf("Slack webhook should've been %s, but was %s", "http://example.com", config.Alerting.Slack.WebhookUrl)
	}
	if config.Alerting.PagerDuty == nil || !config.Alerting.PagerDuty.IsValid() {
		t.Fatal("PagerDuty alerting config should've been valid")
	}
	if config.Alerting.PagerDuty.IntegrationKey != "00000000000000000000000000000000" {
		t.Errorf("PagerDuty integration key should've been %s, but was %s", "00000000000000000000000000000000", config.Alerting.PagerDuty.IntegrationKey)
	}
	if len(config.Services) != 1 {
		t.Error("There should've been 1 service")

@@ -166,8 +176,11 @@ services:

	if !config.Services[0].Alerts[0].Enabled {
		t.Error("The alert should've been enabled")
	}
	if config.Services[0].Alerts[0].Threshold != 7 {
		t.Errorf("The threshold of the alert should've been %d, but it was %d", 7, config.Services[0].Alerts[0].Threshold)
	if config.Services[0].Alerts[0].FailureThreshold != 7 {
		t.Errorf("The failure threshold of the alert should've been %d, but it was %d", 7, config.Services[0].Alerts[0].FailureThreshold)
	}
	if config.Services[0].Alerts[0].FailureThreshold != 7 {
		t.Errorf("The success threshold of the alert should've been %d, but it was %d", 2, config.Services[0].Alerts[0].SuccessThreshold)
	}
	if config.Services[0].Alerts[0].Type != core.SlackAlert {